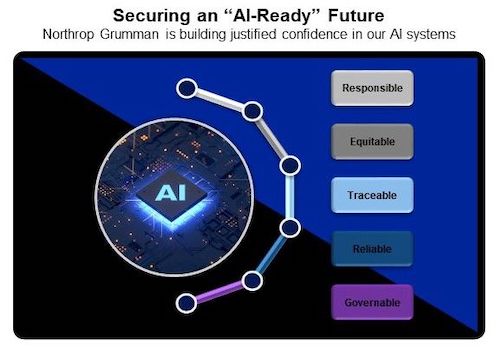

Northrop Grumman is working to establish ‘justified confidence’ in Artificial Intelligence (AI) systems by aligning AI development with the U.S. Department of Defense’s five AI ethical principles to ensure that platforms are accountable, robust and reliable.

Northrop Grumman is taking a systems engineering approach to AI development and is a conduit for pulling in university research, commercial best practices and government expertise and oversight.

One of the company’s partners is a Silicon Valley startup, Credo AI. Credo AI is sharing its governance tools as Northrop Grumman applies comprehensive, relevant ethical AI policies to guide its AI development.

The company is also working with universities like Carnegie Mellon to develop new secure and ethical AI best practices, in addition to collaborating with leading commercial companies to advance AI technology.

Another step the company is taking is to extend its DevSecOps process to automate and document best practices with regard to AI software development, monitoring, testing and deployment.

Critical to success is Northrop Grumman’s AI workforce, because knowing how to develop AI technology is just one piece of a complex mosaic. Its AI engineers also understand the mission implications of the technology they develop, which helps ensure the operational effectiveness of AI systems in their intended mission space. That’s why the company continues to invest in a mission-focused AI workforce through formal training, mentoring and apprenticeship programs.

Northrop Grumman’s secure DevSecOps practices and mission-focused employee training help to ensure appropriate use of judgment and care in responsible AI development.

The company strives for equitable algorithms and works to minimize the potential for unintended bias by leveraging a diverse engineering team and testing for data bias using commercial best practices, among other monitoring techniques.

Northrop Grumman has developed tools that provide an immutable log of data provenance, supporting traceable, transparent and auditable development processes.
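One common way to make a provenance log tamper-evident is a hash chain, in which each entry stores a hash of the previous one. The Python sketch below is illustrative only and does not describe Northrop Grumman's tools; the `ProvenanceLog` class and the record fields are hypothetical names chosen for the example.

```python
import hashlib
import json

# Hypothetical starting value for the chain; any fixed constant would do.
GENESIS_HASH = "0" * 64


def _entry_hash(prev_hash, record):
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Append-only log in which each entry is chained to its predecessor."""

    def __init__(self):
        self._entries = []

    def append(self, record):
        """Add a JSON-serializable record and return its chained hash."""
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        entry = {
            "prev": prev_hash,
            "record": record,
            "hash": _entry_hash(prev_hash, record),
        }
        self._entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            expected = _entry_hash(prev_hash, entry["record"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = ProvenanceLog()
log.append({"dataset": "training-set-a", "action": "ingested"})
log.append({"dataset": "training-set-a", "action": "labels reviewed"})
print(log.verify())
```

A chain like this only detects changes after the fact. Making a log immutable in practice would also require controlled storage and access, which this sketch does not address.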

The company supports reliability by emphasizing mission understanding, which it uses to define explicit, well-defined cases in which its AI systems will operate. Leveraging best practices, its work in AI governance enables robust risk assessment, algorithmic transparency and graceful termination when required.

This integrated approach from development to operation is essential to achieving justified confidence in AI-enabled systems.